Daily’s developer platform powers audio and video experiences for millions of people all over the world. Our customers are developers who use our APIs and client SDKs to build audio and video features into applications and websites.

In our AI Week series, we’re introducing two new toolkits, several new components of our global infrastructure, and a series of AI-focused partnerships.

Our kickoff post this week goes into more detail about the topics we’re covering and how we think about the potential of combining WebRTC, video and audio, and AI. Feel free to click over and read that intro before (or after) reading this post.

AI that gives healthcare providers time back, every day

Telehealth usage grew rapidly during the COVID-19 pandemic, accelerating regulatory, billing, and technology changes that were already underway. Telehealth is now embedded in healthcare delivery, and can help expand access to care and improve patient outcomes.

From a technology point of view, one powerful benefit of telehealth interactions is that all audio is captured digitally, making it ready to be transcribed and summarized.

Earlier this year, several of our telehealth customers started asking us whether we could help them understand the landscape of HIPAA-compliant AI tools, and how to use those tools as part of their telehealth workflows.

Healthcare providers, our customers told us, spend 10 to 15 hours each week writing clinical care notes. These notes summarize a patient visit, along with the provider’s assessments and recommended next steps. Writing clinical notes is time-consuming, yet in general it doesn’t leverage a provider’s expertise very well, and isn't work that's regarded as interesting or creative. It’s a necessary task that humans could do and computers couldn’t. Until now.

Our telehealth customers were seeing examples of GPT-4 producing summaries of things like sales calls, customer support interactions, podcasts, and YouTube videos, and they were asking whether a Large Language Model (LLM) could produce good first drafts of clinical notes.

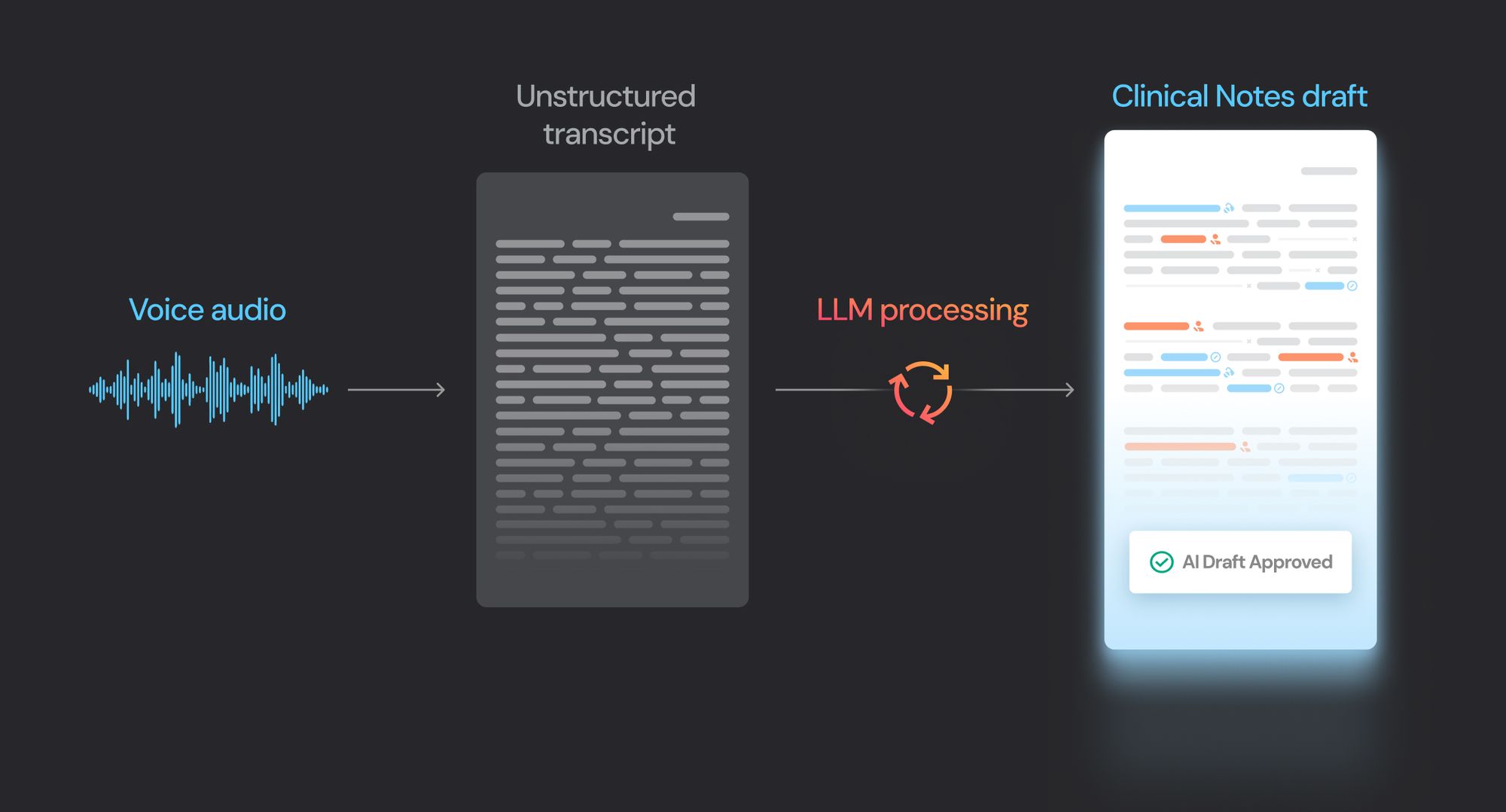

A general approach to summarization and post-processing

Today’s most advanced “frontier” Large Language Models have an impressive range of use cases. But perhaps the most impressive thing about them, to a computer programmer, is that they are good at turning unstructured input data into structured output. This is a genuinely new capability, and is perhaps the biggest reason so many engineers are so excited about these new tools.

For most use cases that are complex enough to be interesting, the transformation from unstructured data to structured output needs to happen at multiple levels. At the level of content, the Large Language Model processes the input text and summarizes or prioritizes the parts of the text that are most important. At the level of format, the LLM organizes the output into sequences or sections that make sense for the specific use case.

Generating clinical notes is a good example of this. Audio from a telehealth session is transcribed, sent to one or more AI models, and turned into output that has consistent content characteristics and a consistent structure.
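To make "consistent structure" concrete, here is a minimal sketch of a format-level check on a draft note. It assumes the model was asked to return plain text with one `Section:` heading per SOAP section (Subjective, Objective, Assessment, Plan). The function names and the heading convention are hypothetical and chosen for illustration; they are not part of any Daily API.

```python
import re

# The four SOAP sections: Subjective, Objective, Assessment, Plan.
SOAP_SECTIONS = ("Subjective", "Objective", "Assessment", "Plan")


def parse_sections(draft: str, sections=SOAP_SECTIONS) -> dict[str, str]:
    """Split a draft with 'Section:' headings into a {section: text} dict.

    Assumption: each heading sits at the start of a line and ends with a colon.
    """
    pattern = re.compile(
        r"^(%s):[ \t]*" % "|".join(map(re.escape, sections)),
        re.MULTILINE,
    )
    matches = list(pattern.finditer(draft))
    parsed = {}
    for i, match in enumerate(matches):
        # A section's body runs until the next heading, or to the end of the draft.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(draft)
        parsed[match.group(1)] = draft[match.end():end].strip()
    return parsed


def missing_sections(parsed: dict[str, str], sections=SOAP_SECTIONS) -> list[str]:
    """Return the sections that are absent or empty, in canonical order."""
    return [name for name in sections if not parsed.get(name)]
```

A draft that fails this kind of check could be regenerated or flagged for the provider rather than stored as-is.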

An example of SOAP notes draft generation

This is a data pipeline with four steps:

- Capture audio

- Transcribe the audio

- Process and transform the transcription

- Validate and store the output
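The four steps above can be sketched as a small pipeline object. This is an illustrative outline only: `NotesPipeline`, `PipelineResult`, and the stage callables are hypothetical names, and the lambdas below are stand-ins for a real speech-to-text service, an LLM call, and a HIPAA-compliant datastore.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PipelineResult:
    transcript: str
    note: str


@dataclass
class NotesPipeline:
    # Each stage is injected, so a provider or prompt can be swapped
    # without changing the pipeline itself.
    transcribe: Callable[[bytes], str]       # step 2: audio -> transcript
    transform: Callable[[str], str]          # step 3: transcript -> draft note
    validate: Callable[[str], bool]          # step 4a: accept or reject the draft
    store: Callable[[PipelineResult], None]  # step 4b: persist the result

    def run(self, audio: bytes) -> PipelineResult:
        # Step 1 (capture) happens upstream; here we only check it produced data.
        if not audio:
            raise ValueError("no audio captured")
        transcript = self.transcribe(audio)
        note = self.transform(transcript)
        if not self.validate(note):
            raise ValueError("draft note failed validation")
        result = PipelineResult(transcript=transcript, note=note)
        self.store(result)
        return result


# Usage with stand-in stages (no real services are called).
saved = []
pipeline = NotesPipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    transform=lambda text: "Summary: " + text,
    validate=lambda note: bool(note.strip()),
    store=saved.append,
)
result = pipeline.run(b"Patient reports mild headache.")
```

Keeping each stage behind a plain callable is one way to keep the validation and storage rules independent of whichever transcription or language model is in use.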

Next week we'll be writing more about new APIs that support building AI-powered data pipelines like this one. These pipelines take audio, video, and metadata from real-time video sessions as input, and provide hooks for processing this data with Large Language Models and other AI tools and services.

To extend Daily’s “building blocks” into this new world of generative AI, we’re leveraging our deep experience with video, audio, and transcription, along with our global infrastructure that was built from the ground up to route, manage, process, and store video and audio.

What matters most: data privacy, accuracy, reliability, flexibility

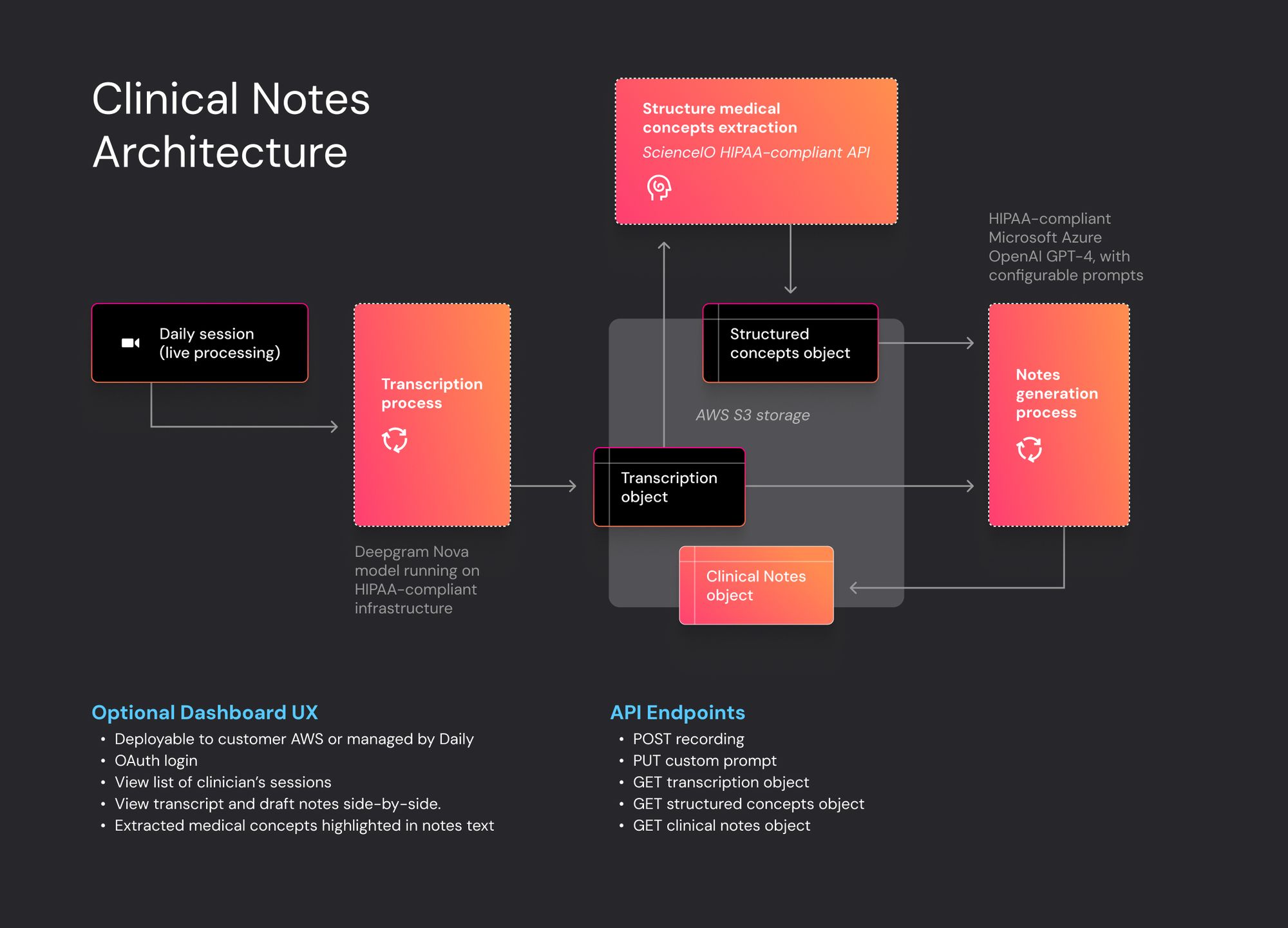

The clinical notes use case is a demanding test for AI workflow APIs.

- All data processing and storage must protect patient privacy and be HIPAA-compliant.

- The quality of the output is highly sensitive to the accuracy of the transcription.

- Clinical notes are a critical part of the healthcare workflow, so the APIs that power them have to work at scale, with predictable latency.

- There are several common output formats for clinical notes, so the pipeline needs to allow LLM prompts and other pipeline steps to be configurable.
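As a rough illustration of the last point, a configurable pipeline might keep the note format as data and build the LLM prompt from it. The sketch below assumes two formats, SOAP and DAP (Data, Assessment, Plan). `NOTE_FORMATS`, `build_prompt`, and the prompt wording are hypothetical and are not the prompts Daily uses.

```python
# Section lists per note format. Adding a format is a configuration change,
# not a code change.
NOTE_FORMATS = {
    "soap": ("Subjective", "Objective", "Assessment", "Plan"),
    "dap": ("Data", "Assessment", "Plan"),
}


def build_prompt(transcript: str, note_format: str = "soap") -> str:
    """Build an instruction prompt for the requested note format."""
    try:
        sections = NOTE_FORMATS[note_format.lower()]
    except KeyError:
        raise ValueError(f"unknown note format: {note_format!r}") from None
    headings = "\n".join(f"- {name}" for name in sections)
    return (
        "Draft a clinical note from the transcript below.\n"
        "Use exactly these sections, in this order:\n"
        f"{headings}\n"
        "Only include information stated in the transcript.\n\n"
        f"Transcript:\n{transcript}"
    )
```

The same idea extends to other pipeline steps, for example choosing a transcription model or a validation rule per customer.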

We’ve extended Daily’s existing HIPAA-compliant infrastructure to include support for these new workflow APIs. We’ve also signed HIPAA Business Associate Agreements with three new partners. (More on that below.)

Our global WebRTC infrastructure and extensively tuned SDKs are key to delivering the best possible audio as input to the transcription step in the pipeline. Higher-quality audio makes more accurate speech-to-text transcription possible. Daily’s bandwidth management and very low average first-hop latency everywhere in the world (13ms) help ensure that as many audio packets as possible are successfully transmitted and recorded.

To perform the transcription step, we are working with our long-time partner Deepgram. Deepgram is an industry leader in both overall accuracy and the flexibility of their transcription models. Daily has offered direct access to Deepgram’s real-time transcription service for several years. We’re now wrapping Deepgram’s batch-mode transcription APIs to make it easy to build transcription-driven post-processing workflows. We’ve also signed a HIPAA BAA with Deepgram.

For the Large Language Model step, we’re working with Microsoft Azure OpenAI and the Microsoft Software & Digital Platforms Group. Microsoft has given Daily early access to the new Azure HIPAA-compliant OpenAI GPT-4 service.

We’ve tested clinical notes generation extensively with GPT-3.5, GPT-4, and the Llama-2 family of models. GPT-4 produces the highest-quality output. For the clinical notes use case, that quality advantage outweighs GPT-4’s higher cost compared to less powerful models.

To deliver even better results beyond what GPT-4 does by itself, we’ve also partnered with ScienceIO, a company that pioneered using Large Language Models to enrich and structure medical data. We use ScienceIO’s medical structured data APIs in combination with GPT-4.

Deepgram, ScienceIO, and GPT-4 together form a state-of-the-art technology stack for processing audio and generating high-quality output for healthcare use cases.

As AI technology evolves, we expect this pipeline to evolve, too. We’re optimistic, for example, that fine-tuning Llama-2 has the potential to open up additional possibilities for patient and provider workflows beyond clinical notes.

Accessing these new APIs, and what’s next

Our Clinical Notes API for Telehealth is available today. If you have a use case you’re particularly excited about, or any questions, please contact us.

We’re excited about our roadmap for post-processing APIs! We are releasing new features over the next few weeks. In addition to audio- and transcription-centric use cases, we have a full set of features in development for video analytics, composition, and editing. We’ll have a bit more to say about that next week.

As always, we love to write and talk about video and audio, real-time networking, and now AI. So if you’re interested in these topics, too, please check out the rest of our AI Week posts, join us on Discord, or find us online or IRL at one of the events we host.