This post is the third in a series about building custom video chat applications that support large meetings using the Daily call object. The features we’ll add target use cases where dozens (or hundreds!) of participants in a call of up to 1,000 people might turn on their cameras.

We built Daily on top of WebRTC, a set of protocols for real-time communication across the internet. As a browser-based standard, WebRTC provides tools for handling the wide range of quirks and constraints that transmitting data between different web clients can bring. We get to know those tools at Daily so that you don’t have to; one of our recent favorites is WebRTC simulcast.

After an overview of WebRTC simulcast, this post demonstrates how to request specific simulcast layers via the Daily receiveSettings API to save participants’ bandwidth and CPU on large calls.

WebRTC simulcast

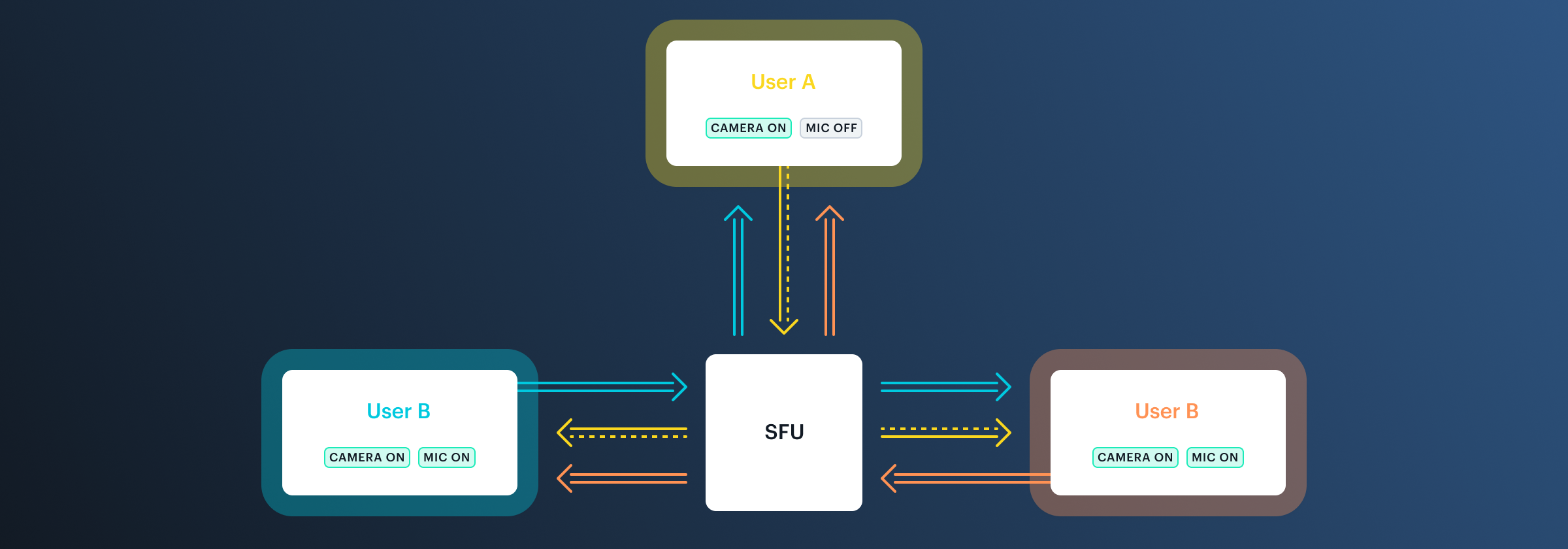

WebRTC calls operate on a publish-subscribe model: participants publish audio, video, and screen share tracks, and subscribe to other participants’ tracks. When a fifth participant joins a Daily call, the call switches from direct peer-to-peer (P2P) connections to sending tracks through a Selective Forwarding Unit (SFU). The SFU processes, re-encrypts, and routes media tracks to participants, which is what allows tracks to be "selectively" forwarded.

With WebRTC simulcast, instead of sending a single track to the SFU, a publishing participant’s web client sends the same source track at a few different resolutions, bitrates, and frame rates. Each of these groups of settings is known as a simulcast layer.

The default layers sent on Daily desktop calls are:

| | Layer 0 | Layer 1 | Layer 2 |
|---|---|---|---|
| Bitrate (bps) | 80,000 | 200,000 | 680,000 |
| Frame rate (fps) | 10 | 15 | 30 |
| Resolution (width x height) | 320x180 | 640x360 | 1280x720 |
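For context, a publishing browser can ask for layers like these through the standard WebRTC API by passing sendEncodings to RTCPeerConnection.addTransceiver(). Daily handles the publishing side for you, so the sketch below is not Daily’s code; it only shows how the table’s values might map onto encoding parameters. The buildSendEncodings name and the rid labels are hypothetical, and the sketch assumes a 1280x720 source so that each layer’s scaleResolutionDownBy can be derived from its width.

```javascript
// Default Daily desktop layers, copied from the table above.
const LAYERS = [
  { bitrate: 80000, fps: 10, width: 320, height: 180 },
  { bitrate: 200000, fps: 15, width: 640, height: 360 },
  { bitrate: 680000, fps: 30, width: 1280, height: 720 },
];

// Hypothetical helper: build RTCRtpEncodingParameters for addTransceiver().
// Assumes the source track is sourceWidth pixels wide (1280 by default).
// The rid labels "q", "h", "f" are illustrative, not Daily's.
function buildSendEncodings(layers, sourceWidth = 1280) {
  const rids = ["q", "h", "f"];
  return layers.map((layer, i) => ({
    rid: rids[i],
    maxBitrate: layer.bitrate,
    maxFramerate: layer.fps,
    scaleResolutionDownBy: sourceWidth / layer.width,
  }));
}

// In a browser, the result would be passed like this:
// pc.addTransceiver(videoTrack, {
//   direction: "sendonly",
//   sendEncodings: buildSendEncodings(LAYERS),
// });

console.log(buildSendEncodings(LAYERS).map((e) => e.scaleResolutionDownBy));
// → [ 4, 2, 1 ]
```

Deriving the scale factor from width keeps the sketch tied to the table; since all three layers share a 16:9 aspect ratio, the heights scale by the same factor.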

The publishing participant sends all three layers to the SFU. The SFU then decides which layer to forward to each subscribed participant based on that subscriber’s network conditions. If the network is very constrained, it forwards layer 0, the lowest-quality layer.
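This post doesn’t describe Daily’s actual SFU logic, and a production SFU likely weighs more signals (packet loss, congestion feedback) than this. Still, a deliberately simplified model of the decision is: forward the highest layer whose bitrate fits within the subscriber’s estimated available bandwidth, and fall back to layer 0 otherwise. The function name and the bandwidth-estimate input are hypothetical.

```javascript
// Bitrates (bps) of the default layers 0, 1, and 2 from the table above.
const LAYER_BITRATES = [80000, 200000, 680000];

// Hypothetical, simplified SFU policy: pick the highest layer whose
// bitrate fits the subscriber's estimated bandwidth (in bps).
// If nothing fits, fall back to layer 0, the cheapest option.
function pickLayerForBandwidth(estimatedBps, bitrates = LAYER_BITRATES) {
  for (let layer = bitrates.length - 1; layer >= 0; layer--) {
    if (bitrates[layer] <= estimatedBps) return layer;
  }
  return 0;
}

console.log(pickLayerForBandwidth(1500000)); // → 2
console.log(pickLayerForBandwidth(300000)); // → 1
console.log(pickLayerForBandwidth(50000)); // → 0
```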

To see the difference between these layers for yourself, clone the daily-demos/track-subscriptions repository and run it locally: cd into the directory, add the environment variables the demo expects, and then run:

```shell
yarn
yarn dev
```

Start a call. Copy the URL at the bottom left and paste it into a new tab to join the call as a second participant. Return to the original localhost tab and click "Auto layers" in the box at the bottom right. The box should now read "Manual layers", and you can click the hamburger icon on the second participant’s video tile to switch between layers.

🔥 Tip: Please try this at home! We tested a few GIFs, but none of them do the layers justice.

But wait, didn’t we say that the SFU decides on a layer to forward automatically? We did! The demo uses the Daily receiveSettings API to override the default forwarding and request a specific layer instead.
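As a rough sketch of what such an override looks like: the call object’s updateReceiveSettings method takes an object keyed by participant ID, where each value can specify a video layer (the same shape the tutorial below uses). The requestLayer helper is hypothetical rather than the demo’s code, it assumes the three default layers (0 to 2), and the fake call object exists only so the snippet runs outside a real Daily call.

```javascript
// Hypothetical helper: ask the SFU to forward one specific simulcast
// layer of a remote participant's video. Assumes the three default layers.
function requestLayer(callObject, participantId, layer) {
  if (![0, 1, 2].includes(layer)) {
    throw new RangeError(`Unsupported layer: ${layer}`);
  }
  // Receive settings shape: { [participantId]: { video: { layer } } }
  return callObject.updateReceiveSettings({
    [participantId]: { video: { layer } },
  });
}

// Stand-in for a Daily call object that just records what it receives.
const fakeCallObject = {
  lastSettings: null,
  updateReceiveSettings(settings) {
    this.lastSettings = settings;
    return Promise.resolve(settings);
  },
};

requestLayer(fakeCallObject, "participant-abc", 0);
console.log(JSON.stringify(fakeCallObject.lastSettings));
// → {"participant-abc":{"video":{"layer":0}}}
```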

That toggling feature was mostly useful for demonstration, so we won’t get into the weeds of it here (though you’re welcome to explore the demo code!). Instead, the tutorial coming up next focuses on a practical receiveSettings use case.

Add dynamic simulcast encoding to a participant video grid with the Daily receiveSettings API

So far in this series, we’ve built a paginated grid to handle calls with dozens or even hundreds of participants. We also added manual track subscriptions so that we don’t receive tracks from participants who are off screen, which improves browser performance.

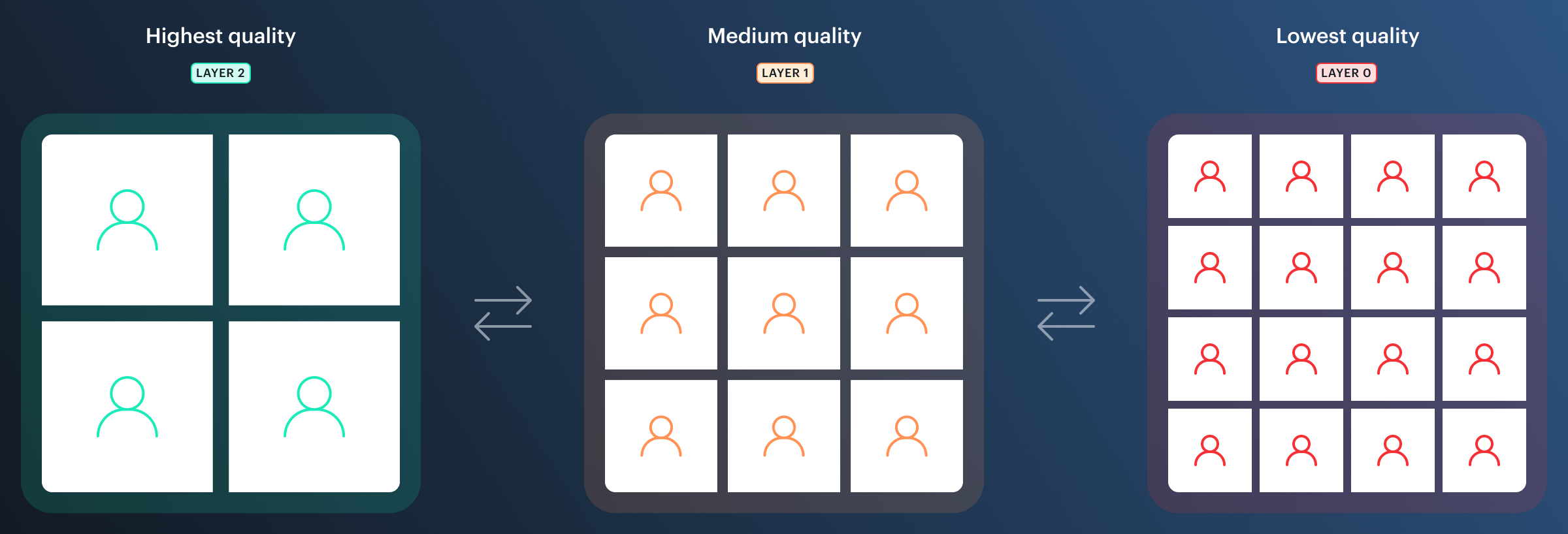

As our final feature for scaling large meetings, we’ll add dynamic simulcast layer selection to the React app we’ve been building. When many videos share the screen, each tile is small, so there’s no need to receive the highest-quality tracks. Lower-quality videos save downstream bandwidth and can also substantially reduce CPU usage, since they take fewer resources to decode and render.

At a high level, we’ll use React hooks to request a specific simulcast layer from the SFU based on how many video tiles are on the page. Let’s get started.

First, in <PaginatedGrid />, we’ll respond to changes in visibleParticipants:

```javascript
// PaginatedGrid.js
useEffect(() => {
  // In "Manual layers" mode, leave layer selection to the user.
  if (!autoLayers) {
    return;
  }

  // Fewer than 5 tiles: layer 2; 5 to 9 tiles: layer 1; 10 or more: layer 0.
  const count = visibleParticipants.length;
  const layer = count < 5 ? 2 : count < 10 ? 1 : 0;

  // Map each remote participant's id to the layer we want forwarded.
  const receiveSettings = visibleParticipants.reduce(
    (settings, participant) => {
      if (participant.id === "local") return settings;
      settings[participant.id] = { video: { layer } };
      return settings;
    },
    {}
  );

  updateReceiveSettings(receiveSettings);
}, [visibleParticipants, autoLayers, updateReceiveSettings]);
```
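The layer choice and the settings object built in this effect don’t depend on React, so one option is to pull them into plain functions that can be unit tested on their own. This is a sketch: layerForCount and buildReceiveSettings are hypothetical names, and participants are assumed to have an id field that is "local" for the local participant, as in the effect.

```javascript
// Same thresholds as the effect: fewer than 5 tiles -> layer 2,
// 5 to 9 tiles -> layer 1, 10 or more -> layer 0.
function layerForCount(count) {
  if (count < 5) return 2;
  if (count < 10) return 1;
  return 0;
}

// Build a receive settings object for every visible remote participant.
// The local participant is skipped, matching the effect.
function buildReceiveSettings(visibleParticipants) {
  const layer = layerForCount(visibleParticipants.length);
  const settings = {};
  for (const participant of visibleParticipants) {
    if (participant.id === "local") continue;
    settings[participant.id] = { video: { layer } };
  }
  return settings;
}

const tiles = [{ id: "local" }, { id: "a" }, { id: "b" }];
console.log(JSON.stringify(buildReceiveSettings(tiles)));
// → {"a":{"video":{"layer":2}},"b":{"video":{"layer":2}}}
```

With these helpers, the body of the effect after the autoLayers check could shrink to updateReceiveSettings(buildReceiveSettings(visibleParticipants)).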

When visibleParticipants changes, the useEffect in <PaginatedGrid /> first checks autoLayers and returns early if the user has switched to manual layers. Otherwise, it counts the visible participants and picks a layer: layer 2 for fewer than five tiles, layer 1 for five to nine, and layer 0 for ten or more. It then builds a settings object whose keys are the visible participants’ ids (skipping the local participant) and whose values specify the video simulcast layer to request. Finally, it passes the new settings to updateReceiveSettings, which comes from ParticipantProvider:

```javascript
// ParticipantProvider.js
const updateReceiveSettings = useCallback(
  (settings) => {
    if (!callObject) return;
    callObject.updateReceiveSettings(settings);
  },
  [callObject]
);
```

updateReceiveSettings passes the new settings to the Daily call object. Once the call object has them, the user interface needs to reflect the new state as well. To handle that, we listen for the call object’s receive-settings-updated event and dispatch an action to the participant reducer whenever receive settings change:

```javascript
// ParticipantProvider.js
useEffect(() => {
  if (!callObject) return;

  function handleReceiveSettings({ receiveSettings }) {
    dispatch({
      type: UPDATE_LAYER,
      receiveSettings,
    });
  }

  callObject.on("receive-settings-updated", handleReceiveSettings);
  return () =>
    callObject.off("receive-settings-updated", handleReceiveSettings);
}, [callObject]);
```

The reducer updates the app state to include the new requested layers:

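```javascript
// participantReducer.js
case UPDATE_LAYER: {
  const participants = prevState.participants.map((p) => ({
    ...p,
    // Not every participant has an entry (e.g. the local participant),
    // and an entry may lack video settings, so guard both levels.
    layer: action.receiveSettings[p.id]?.video?.layer,
  }));
  return { ...prevState, participants };
}
```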
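To see what the UPDATE_LAYER case does to state in isolation, here is a self-contained sketch of a reducer containing only that case. The reducer function name, the action constant’s string value, and the sample state are assumptions for illustration. Participants with no entry in receiveSettings end up with a layer of undefined, and the previous state is left untouched.

```javascript
const UPDATE_LAYER = "UPDATE_LAYER"; // assumed value of the action constant

// Minimal reducer with only an UPDATE_LAYER case.
function participantsReducer(prevState, action) {
  switch (action.type) {
    case UPDATE_LAYER: {
      const participants = prevState.participants.map((p) => ({
        ...p,
        // Optional chaining on both levels: a participant may have no
        // entry, or an entry without video settings.
        layer: action.receiveSettings[p.id]?.video?.layer,
      }));
      return { ...prevState, participants };
    }
    default:
      return prevState;
  }
}

const state = { participants: [{ id: "local" }, { id: "a" }] };
const next = participantsReducer(state, {
  type: UPDATE_LAYER,
  receiveSettings: { a: { video: { layer: 1 } } },
});
console.log(next.participants.map((p) => p.layer)); // → [ undefined, 1 ]
```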

For a reminder on how state gets passed through all the components, check out the first post in this series.

The final layer

We’ve now introduced dynamic simulcast layers! This feature is useful not only in our demo app, which updates the layer based on the number of participant tiles, but also whenever video tiles take up only a small part of the app (as in the Daily Collab Chrome extension for Notion), or on mobile calls with small screens.
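When tile size, rather than tile count, is what varies (as with a small embedded video or a phone screen), one alternative worth considering is to choose the smallest layer that is at least as wide as the tile in physical pixels. The sketch below is hypothetical and assumes the default desktop layer widths from the table earlier in this post; in a browser, the tile’s width could come from its element’s getBoundingClientRect() and the pixel ratio from window.devicePixelRatio.

```javascript
// Widths (px) of the default layers 0, 1, and 2.
const LAYER_WIDTHS = [320, 640, 1280];

// Hypothetical policy: pick the smallest layer at least as wide as the
// tile in physical pixels; use the top layer if none is wide enough.
function layerForTileWidth(tileCssWidth, pixelRatio = 1, widths = LAYER_WIDTHS) {
  const physicalWidth = tileCssWidth * pixelRatio;
  const layer = widths.findIndex((w) => w >= physicalWidth);
  return layer === -1 ? widths.length - 1 : layer;
}

console.log(layerForTileWidth(300)); // → 0
console.log(layerForTileWidth(300, 2)); // → 1
console.log(layerForTileWidth(900)); // → 2
```

The resulting layer could be passed to updateReceiveSettings in the same { video: { layer } } shape used throughout this post.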

We hope you enjoyed this series on large meetings. We invite you to explore all of the sample code (see if you can spot how we implemented layer toggling!). As always, please reach out with any questions or suggestions for other large-meeting features we should add to the repo.