This post is part one of a series on how to build an app with spatialization features using Daily's real-time video and audio APIs.

Introduction

In an increasingly distributed world, there’s a desire to simulate physical spaces for people to meet each other remotely. Applications of spatialization setups include creating virtual offices for remote teams to collaborate online, community hangout spaces, and more.

We’ve gotten lots of questions about how developers can use Daily to build spatial audio and spatial video applications.

Note: When we talk about spatial audio in the context of this demo, we are referring to updating the user’s audio relative to their avatar’s navigation through our world space via the Web Audio API. We are not referring to building soundscape experiences that tools like Dolby Atmos enable.

In this spatialization series, we will present a demo showcasing one approach to building an app with spatialization features using our daily-js API.

In part one (this post!), we’ll provide an overview of what we’re building. We will go through the main application structure, the tech stack we’ve chosen for this demo, and the most relevant parts of the Daily API. In subsequent posts, we’ll go through the implementation details and lessons we learned along the way.

What we’re building

A client-only app with a 2D world which users can traverse with arrow keys. Users hear and see each other when they come within proximity of one another in the world.

Users can broadcast to all other world members, as well as join smaller dedicated spaces to converse in more focused groups (all within the same Daily room…for now).

What we’re not building

An infinitely scalable world. In this iteration of the demo, we’ve focused on highlighting a potential implementation of core spatialization features with a client-only approach. We’ll cover the basics of optimizing such an application in relation to the Daily API, but won’t get too deep into the weeds just yet.

But don’t worry! We have more spatialization content planned for the future, including expanding this demo with more advanced performance enhancements and features.

Getting started

Even though our blog post series will be coming out over the next few weeks, we've made the completed demo app available on GitHub.

First, you will need a Daily account and a Daily room.

To clone and run Daily’s spatialization demo, run the following commands in your terminal:

git clone git@github.com:daily-demos/spatialization.git

cd spatialization

git checkout tags/v1.0.0

npm i

npm run build

npm run start

Then, go to localhost:8080 in your browser.

The stack

- daily-js to handle all of the WebRTC call functionality.

- TypeScript bundled with webpack.

- Web Audio API to manipulate audio properties in the world.

- PixiJS to help us with rendering the world.

- standardized-audio-context package, a wrapper around the native Web Audio API that helps ensure consistent behavior between browsers and enables mocking for tests.

The application structure

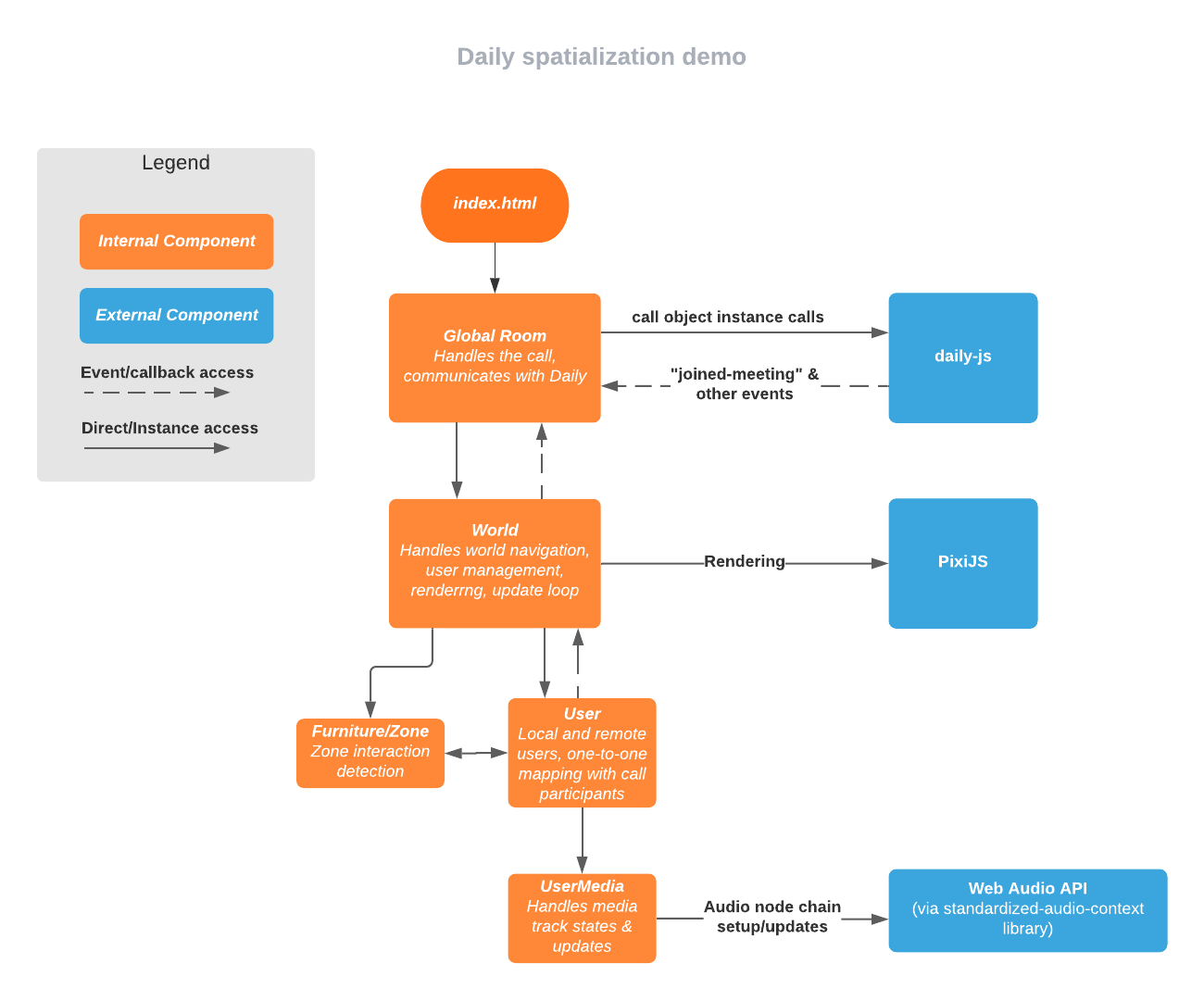

Let’s go through a high-level overview of our spatialization demo. Fear not: we’ll dig into the details in subsequent posts.

The entry point of the demo is an index.ts file, which instantiates a single global Room. The room contains a Daily call object and is responsible for all communication with Daily.

We set up the call object and all relevant Daily event handlers in the Room constructor. When the user submits the entry form with a room URL, the call is joined.
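For illustration, here is a minimal sketch of a Room-like class that follows that flow: handlers are registered in the constructor, and joining happens only when the entry form is submitted. It is not the demo's actual code. To keep it runnable outside a browser, it takes a factory for a call object typed by a small hypothetical CallObjectLike interface instead of importing daily-js; in a real app, that factory would wrap DailyIframe.createCallObject(). The onJoined callback is likewise a hypothetical stand-in for starting the World.

```typescript
// Hypothetical structural subset of the daily-js call object used by this sketch.
// In a browser app, DailyIframe.createCallObject() from "@daily-co/daily-js"
// would provide the real object.
interface CallObjectLike {
  on(event: string, handler: (ev?: unknown) => void): unknown;
  join(options: { url: string }): Promise<unknown>;
}

class Room {
  private readonly callObject: CallObjectLike;
  // Hypothetical hook: in the demo, this is where the World gets started.
  private readonly onJoined: () => void;
  joined = false;

  constructor(createCallObject: () => CallObjectLike, onJoined: () => void) {
    this.callObject = createCallObject();
    this.onJoined = onJoined;

    // Register handlers in the constructor, before joining, so that no
    // events emitted during the join are missed.
    this.callObject.on("joined-meeting", () => {
      this.joined = true;
      this.onJoined();
    });
    this.callObject.on("left-meeting", () => {
      this.joined = false;
    });
  }

  // Called when the user submits the entry form with a room URL.
  async join(url: string): Promise<void> {
    await this.callObject.join({ url });
  }
}
```

Registering handlers before calling join() matters because events such as "joined-meeting" can fire as soon as the join completes.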

After the user is confirmed to be in the call, the World we instantiated when importing our Room is configured and "started". Part of this startup includes setting the world size, creating relevant sprite containers, and populating the world with predefined "zones".
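The startup sequence can be pictured with a plain-data sketch. The World class, the ZoneDef and Zone shapes, and the zone ID numbering are all hypothetical, and the PixiJS sprite containers are left out entirely; the sketch only shows the order of operations: record the world size, then populate the predefined zones, rejecting any that would not fit inside the world.

```typescript
// Hypothetical shapes for illustration only.
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

interface ZoneDef extends Rect {
  label: string;
}

interface Zone extends ZoneDef {
  id: number;
}

class World {
  width = 0;
  height = 0;
  zones: Zone[] = [];
  started = false;

  // Configure and "start" the world once the local user has joined the call.
  start(width: number, height: number, zoneDefs: ZoneDef[]): void {
    this.width = width;
    this.height = height;
    // In the demo, sprite containers would also be created here (omitted).
    this.zones = zoneDefs.map((def, i) => {
      const fits =
        def.x >= 0 &&
        def.y >= 0 &&
        def.x + def.width <= width &&
        def.y + def.height <= height;
      if (!fits) {
        throw new Error(`Zone "${def.label}" does not fit inside the world`);
      }
      // IDs start at 1 here so that 0 can mean "no zone" (an assumption).
      return { ...def, id: i + 1 };
    });
    this.started = true;
  }
}
```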

At this point, we also create the local user within the world and start the update loop, within which the application listens for user navigation events, updates any remote user zones or positions, and checks for collision with any objects (including other zones).
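The per-frame work described above boils down to two small pieces of logic: turning pressed arrow keys into movement, and testing the user's bounds against other rectangles. Below is a minimal sketch using axis-aligned bounding box (AABB) overlap checks. The SPEED constant, the Rect shape, and the tick() helper are hypothetical names; in the browser, the set of pressed keys would be filled from keydown and keyup listeners, and tick() would be called from a render loop (for example, a PixiJS Ticker callback).

```typescript
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

const SPEED = 4; // Hypothetical movement speed, in world pixels per tick.

// Axis-aligned bounding box (AABB) overlap test. Rectangles that only
// touch along an edge do not count as overlapping.
function intersects(a: Rect, b: Rect): boolean {
  return (
    a.x < b.x + b.width &&
    a.x + a.width > b.x &&
    a.y < b.y + b.height &&
    a.y + a.height > b.y
  );
}

function clamp(value: number, min: number, max: number): number {
  return Math.min(Math.max(value, min), max);
}

// One iteration of the update loop: move the local user according to the
// currently pressed arrow keys, keep them inside the world, and report the
// index of the first zone they overlap (-1 if none).
function tick(
  user: Rect,
  pressedKeys: ReadonlySet<string>,
  zones: readonly Rect[],
  worldWidth: number,
  worldHeight: number,
): { user: Rect; zoneIndex: number } {
  let dx = 0;
  let dy = 0;
  if (pressedKeys.has("ArrowLeft")) dx -= SPEED;
  if (pressedKeys.has("ArrowRight")) dx += SPEED;
  if (pressedKeys.has("ArrowUp")) dy -= SPEED;
  if (pressedKeys.has("ArrowDown")) dy += SPEED;

  const moved: Rect = {
    ...user,
    x: clamp(user.x + dx, 0, worldWidth - user.width),
    y: clamp(user.y + dy, 0, worldHeight - user.height),
  };
  const zoneIndex = zones.findIndex((zone) => intersects(moved, zone));
  return { user: moved, zoneIndex };
}
```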

The diagram at the top of this section gives a high-level illustration of the application structure.

Daily API

The most relevant parts of daily-js which we will use in this demo are:

- The Daily call object

- "app-message" events for zone and positional data, handling them and sending them via sendAppMessage()

- "network-connection" events to detect when we switch between P2P and SFU modes

- subscribeToTracksAutomatically call object configuration property, to prevent every participant from automatically being subscribed to every other participant’s tracks

- setSubscribedTracks participant update action, to subscribe to participants who are in the same proximity or focus zone as the local user

- setBandwidth() instance method to lower track constraints in global traversal mode

- camSimulcastEncodings call object property, to define a single custom simulcast layer and prevent lower layers from being selected after setting the bandwidth

We will also hook into various meeting join and leave events. We’ll go through the handlers for those once we dig into the implementation in the next post.
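To show how several items from the list above could fit together, here is a hedged sketch. It assumes the call object was created with subscribeToTracksAutomatically set to false, that each client broadcasts its position with sendAppMessage(), that "app-message" events carry the payload in data and the sender's session ID in fromId, and that "network-connection" events expose type and event fields. The SpatialCallManager class, the { action: "pos", x, y } message shape, the EARSHOT radius, and the constraint values are all hypothetical, and the call object is typed through a small structural interface so the logic runs without a browser.

```typescript
// Hypothetical structural subset of the daily-js call object. With the real
// library, the object would come from something like:
//   DailyIframe.createCallObject({ subscribeToTracksAutomatically: false })
interface CallObjectLike {
  sendAppMessage(data: unknown, to?: string): unknown;
  updateParticipant(
    sessionId: string,
    updates: { setSubscribedTracks: { audio: boolean; video: boolean } },
  ): unknown;
  setBandwidth(options: {
    trackConstraints: { width?: number; height?: number; frameRate?: number };
  }): unknown;
}

// Hypothetical payload format for positional app messages.
interface PositionMessage {
  action: "pos";
  x: number;
  y: number;
}

const EARSHOT = 150; // Hypothetical proximity radius, in world pixels.

class SpatialCallManager {
  private readonly call: CallObjectLike;
  // Last subscription state per remote session ID, so updateParticipant()
  // is only called when someone crosses the proximity boundary.
  private readonly subscribed = new Map<string, boolean>();
  private inTraversal: boolean | null = null;
  topology: "unknown" | "peer-to-peer" | "sfu" = "unknown";
  localX = 0;
  localY = 0;

  constructor(call: CallObjectLike) {
    this.call = call;
  }

  // Broadcast the local user's position to everyone ("*") in the call.
  broadcastPosition(x: number, y: number): void {
    this.localX = x;
    this.localY = y;
    const msg: PositionMessage = { action: "pos", x, y };
    this.call.sendAppMessage(msg, "*");
  }

  // "app-message" handler: subscribe to nearby senders, unsubscribe from distant ones.
  onAppMessage(ev: { data: unknown; fromId: string }): void {
    const msg = ev.data as Partial<PositionMessage> | null;
    if (!msg || msg.action !== "pos" || typeof msg.x !== "number" || typeof msg.y !== "number") {
      return; // Not a position message.
    }
    const inRange = Math.hypot(msg.x - this.localX, msg.y - this.localY) <= EARSHOT;
    if (this.subscribed.get(ev.fromId) === inRange) return; // No change.
    this.subscribed.set(ev.fromId, inRange);
    this.call.updateParticipant(ev.fromId, {
      setSubscribedTracks: { audio: inRange, video: inRange },
    });
  }

  // "network-connection" handler: remember whether media currently flows
  // peer-to-peer or through the SFU, so other code can consult it.
  onNetworkConnection(ev: { type: string; event: string }): void {
    if (ev.event !== "connected") return;
    if (ev.type === "peer-to-peer") this.topology = "peer-to-peer";
    else if (ev.type === "sfu") this.topology = "sfu";
  }

  // Lower track constraints in global traversal mode and restore them in a
  // focus zone, skipping redundant setBandwidth() calls.
  setGlobalTraversal(inTraversal: boolean): void {
    if (this.inTraversal === inTraversal) return;
    this.inTraversal = inTraversal;
    this.call.setBandwidth({
      trackConstraints: inTraversal
        ? { width: 160, height: 120, frameRate: 10 }
        : { width: 640, height: 480, frameRate: 30 },
    });
  }
}
```

This sketch only re-evaluates a remote participant when that participant sends a new position; a fuller version would also re-check stored remote positions whenever the local user moves. It also leaves out the camSimulcastEncodings configuration, which would be supplied when the call object is created.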

Web Audio API

The Web Audio API is a browser interface that lets developers control audio sources and outputs. It works by chaining together nodes, each of which applies a different kind of processing to an audio stream. We’ll use it to apply effects to the audio streams in our 2D world.

Specifically, we’ll be using the API to control the volume and pan of audio the local user receives from remote participants.
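As a rough illustration of how volume and pan could be derived from positions, here is a sketch using a simple linear falloff. The falloff curve, the EARSHOT radius, and the spatialParams() name are assumptions, not the demo's actual formula. The only hard constraints come from the Web Audio API itself: a StereoPannerNode's pan runs from -1 (full left) to 1 (full right), and a gain of 0 is silence.

```typescript
const EARSHOT = 150; // Hypothetical radius beyond which a remote user is inaudible.

interface Point {
  x: number;
  y: number;
}

// Compute gain (0..1) and stereo pan (-1..1) for a remote participant,
// based on where they are relative to the local user.
function spatialParams(local: Point, remote: Point): { gain: number; pan: number } {
  const dx = remote.x - local.x;
  const dy = remote.y - local.y;
  const distance = Math.hypot(dx, dy);
  // Linear falloff: full volume at the local user's position, silent at EARSHOT.
  const gain = Math.max(0, 1 - distance / EARSHOT);
  // Pan by horizontal offset only: a user to the left pans left.
  const pan = Math.min(1, Math.max(-1, dx / EARSHOT));
  return { gain, pan };
}
```

In practice these values would be applied to a GainNode and a StereoPannerNode, ideally with gradual ramps such as AudioParam.setTargetAtTime() rather than abrupt jumps, to avoid audible clicks.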

The most relevant parts of the Web Audio API used in this demo are:

- AudioContext, to create our nodes, including source and destination nodes

- GainNode, to fade participant volume in and out as users navigate in the world

- StereoPannerNode, to emit sound from predominantly the left or right speaker depending on the user’s position in relation to the local participant. We will also go over some gotchas with the reliability of this node as it relates to a known Chromium echo cancellation issue and the listener’s hardware

- DynamicsCompressorNode, to help prevent audio clipping and distortion

- MediaStreamAudioSourceNode, the entry point of our web audio node chain

- MediaStreamAudioDestinationNode, the final node in our node chain
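Wiring these nodes together might look like the following sketch. The node order (source, gain, stereo panner, compressor, destination) is one plausible arrangement and not necessarily the demo's. The small structural interfaces are hypothetical and exist so the sketch compiles and runs without browser typings; a native AudioContext, or the one exported by standardized-audio-context, should provide the same factory methods.

```typescript
// Hypothetical structural subset of the Web Audio API used by this sketch.
interface AudioParamLike {
  value: number;
}
interface AudioNodeLike {
  // Like AudioNode.connect(), returns the node it was connected to.
  connect(destination: AudioNodeLike): AudioNodeLike;
}
interface GainNodeLike extends AudioNodeLike {
  gain: AudioParamLike;
}
interface StereoPannerNodeLike extends AudioNodeLike {
  pan: AudioParamLike;
}
interface AudioContextLike<TStream, TOutStream> {
  createMediaStreamSource(stream: TStream): AudioNodeLike;
  createGain(): GainNodeLike;
  createStereoPanner(): StereoPannerNodeLike;
  createDynamicsCompressor(): AudioNodeLike;
  createMediaStreamDestination(): AudioNodeLike & { stream: TOutStream };
}

interface SpatialChain<TOutStream> {
  gain: GainNodeLike;
  panner: StereoPannerNodeLike;
  output: TOutStream;
}

// MediaStreamAudioSourceNode -> GainNode -> StereoPannerNode
//   -> DynamicsCompressorNode -> MediaStreamAudioDestinationNode
function buildSpatialChain<TStream, TOutStream>(
  ctx: AudioContextLike<TStream, TOutStream>,
  remoteStream: TStream,
): SpatialChain<TOutStream> {
  const source = ctx.createMediaStreamSource(remoteStream);
  const gain = ctx.createGain();
  const panner = ctx.createStereoPanner();
  const compressor = ctx.createDynamicsCompressor();
  const destination = ctx.createMediaStreamDestination();

  // connect() returns its argument, so the chain reads left to right.
  source.connect(gain).connect(panner).connect(compressor).connect(destination);

  // Start silent and centered; position updates adjust these later.
  gain.gain.value = 0;
  panner.pan.value = 0;
  return { gain, panner, output: destination.stream };
}
```

The returned output stream would then be played back, for example by assigning it to an audio element's srcObject.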

What’s next?

This concludes an introductory overview of our spatialization demo. We hope you’re as excited to dig into the implementation as we are.

In the next post, we will walk through the aforementioned Room class and see how it interacts with Daily and the world.