A couple of months ago, one of the engineers at Daily asked whether we had a policy on using AI tools like GitHub Copilot and ChatGPT in engineering work. We didn’t, but the question alone made it clear that we needed one, stat. Our team weaves security into every feature and pays close attention to our own ethics and efficiency, too. Without guidance on AI tools, we risked undermining all of those priorities, either because people would inadvertently misuse new tools or because they would avoid them unnecessarily. So by the end of the day, we’d formed a temporary working group, with representatives from across engineering, to evaluate the safety and utility of various AI tools.

Moishe Lettvin, a backend engineer, presented the group’s findings and recommendations recently at one of our weekly Eng All Hands meetings. We thought engineers outside Daily might be interested in this topic, so below is a summary of the group’s conclusions and an edited video of his presentation.

Before I share the video (which doesn’t show Moishe at all), here’s a screenshot of what he looked like that day. You’re probably wondering whether Moishe’s background is virtual. It isn’t: he just works in a very clean basement with windows.

An AI-powered summary of the presentation

The bullets below were generated by an internal tool that uses a combination of Deepgram, Whisper and ChatGPT-4 to summarize meeting content. I then edited them. Moishe’s talk goes into more detail about each. He also raises some questions for consideration but doesn’t answer them explicitly — reflecting the state of our internal discussions.

Key risks of third-party AI tools

All tools have benefits and tradeoffs. Here are the biggest risks the working group identified for AI tools specifically.

- Ethics: There may be ethical implications of using tools whose models were trained on code from public repositories without the explicit permission of its authors.

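- Leaking code and secrets: Beware of unintentional data leakage. Anything sent to a third-party tool, including proprietary code and any secrets it contains, leaves our control.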

- Inaccurate results: AI tools are probabilistic and sometimes wrong, so their output can’t be assumed to be reliable.

- Noise: These tools can generate a large volume of output, and more output isn’t always better.

Potential rewards

- Efficiency: AI tools like Copilot can provide significant time savings by speeding up the coding process.

- Understanding AI strengths and limits: Using AI tools can help the team gain a tactile understanding of the limitations, failure modes, and strengths of these technologies, which can ultimately help us develop better products.

Recommendations for engineers

The following recommendations are specific to Daily engineers. Mileage may vary for your organization, of course.

- Use your judgment.

- Prefer AI tools from established, trusted organizations. For example, GitHub is a mature company whose products we already use, so Copilot is a reasonable option under this guideline.

- Pay attention to what data is being transmitted and how.

- Understand how the output of the tools will be used.

  - Will the "consumer" of the output know it was generated with an AI?

  - How much trust should be placed in it?

  - How correct does it need to be?

  - How correct will it seem?

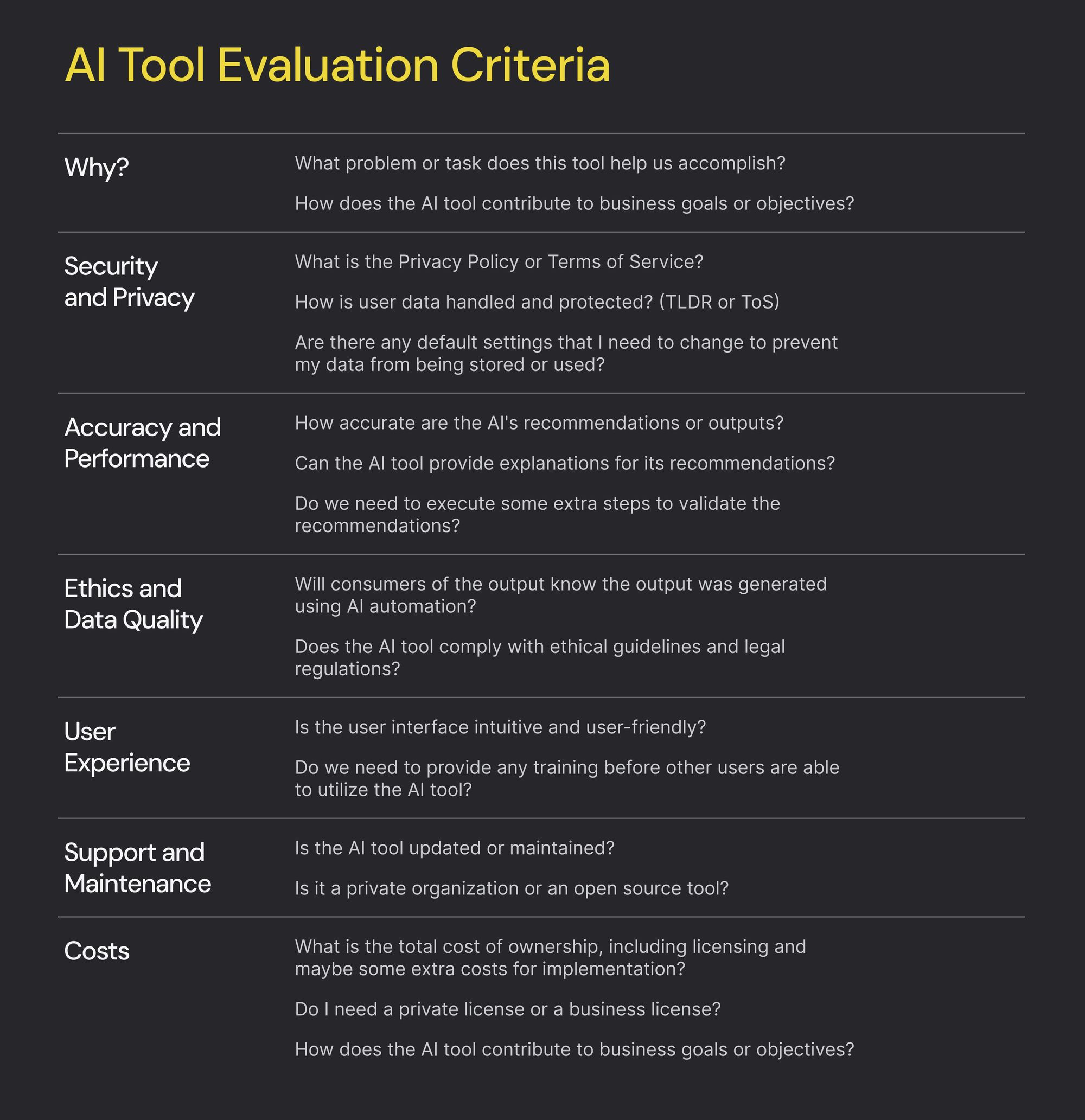

- When you’re considering a new tool for evaluation, use a handy checklist like this one we created:

What’s your organization doing with AI tools?

We’d like to hear how your teams are evaluating AI tools your engineers might use. Come chat with us in our Discord community.