Daily’s developer platform powers audio and video experiences for millions of people all over the world. Our customers are developers who use our APIs and client SDKs to build audio and video features into applications and websites.

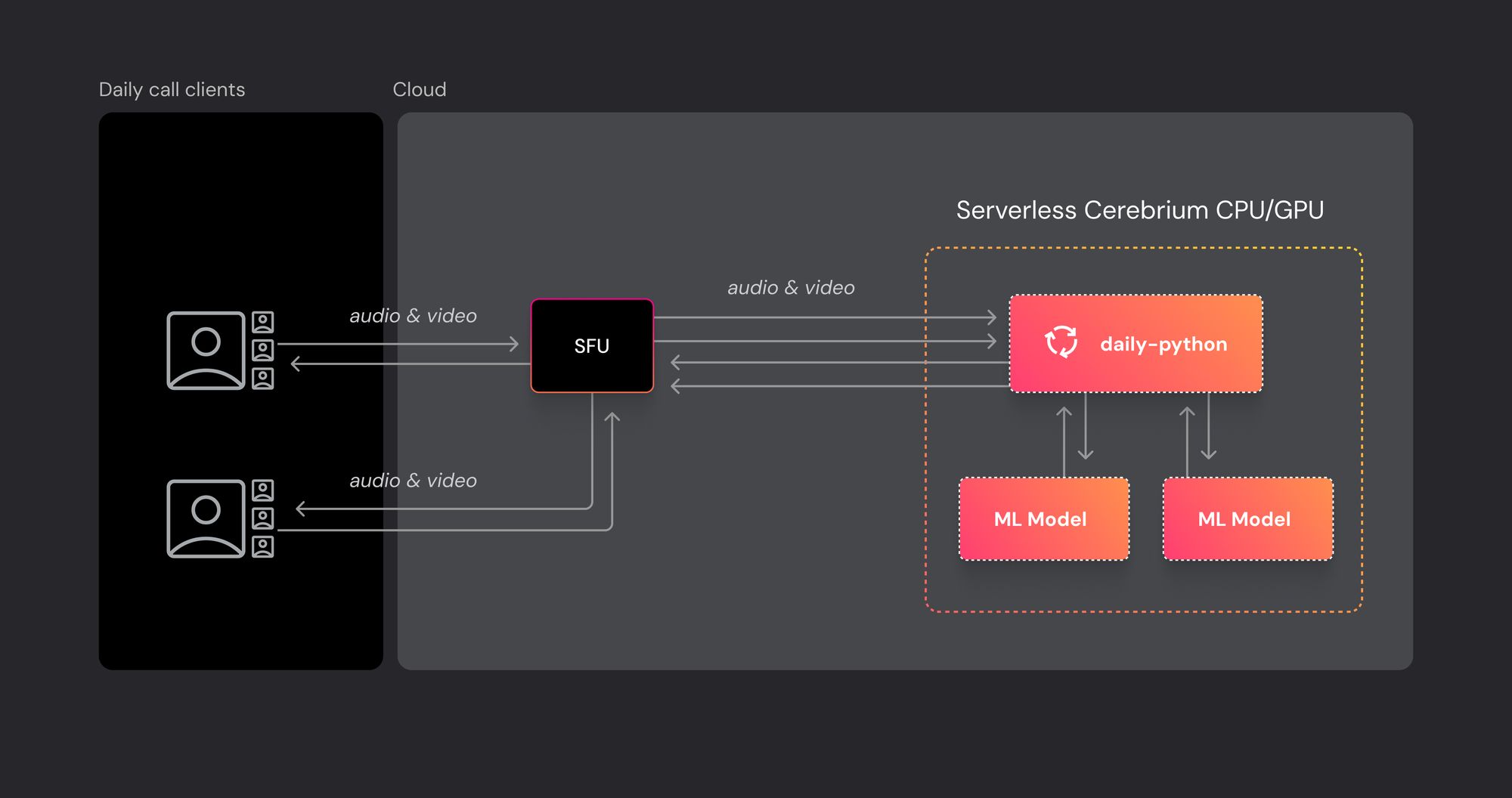

Our AI Week series looks at how developers can combine real-time video with AI as they build with our platform. Today we're announcing a partnership with Cerebrium, a serverless infrastructure platform for training, deploying, and monitoring machine learning models. You can now run daily-python seamlessly as part of a Cerebrium application. You can read more about the Daily Python SDK here.

Learn more about the topics we’re covering in this AI Week series, and how we think about the potential of combining WebRTC, video and audio, and AI, in our kickoff post. Feel free to click over and read that intro before (or after) reading this post.

As part of our ongoing AI Week series, we are thrilled to unveil our latest partnership with Cerebrium: a serverless deployment option for the daily-python SDK. Cerebrium makes it easy to run daily-python alongside hosted AI models in the same container. This removes the need to manage the underlying infrastructure and gives engineers easy access to hosted ML capabilities alongside their voice and video streams.

Elevate your apps with AI: running daily-python on serverless infrastructure

Bringing AI models into voice and video applications at scale can feel daunting, particularly when those models require intensive computational resources. Tasks such as object tracking, object segmentation, video analysis, or speech transcription demand the right mix of I/O, memory, CPU, and GPU resources to run in real time.

With Cerebrium’s serverless deployment, you can sidestep the intricacies of scaling CPU/GPU and Kubernetes resources. You can also deploy off-the-shelf Prebuilt ML models that are fine-tuned on your data. Cerebrium already offers a library of more than 20 Prebuilt models, covering a broad range of LLMs and generative voice and video models, such as:

- Llama 2

- GPT4All

- OpenAI’s Whisper

- Meta Seamless

- ControlNet

- Stable Diffusion

- Meta’s Segment Anything

- And more

Here is what daily-python within Cerebrium brings to developers:

- Real-time media processing: the ability to send media from the call to an ML model at up to 15 FPS

- Quick model inferences: receive inferences from the model within hundreds of milliseconds, since the model is co-located with the call client

- Instant action: as soon as an inference comes back from the model, send the modified audio and video, or a notification message, into the call
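To make the 15 FPS figure concrete, here is a minimal, standard-library-only sketch of the arithmetic involved. The helpers `frame_cadence`, `workers_needed`, and `FrameSampler` are hypothetical, not part of daily-python or Cerebrium: the sampler forwards one frame out of every N, and `workers_needed` estimates how many inferences must run in parallel to keep pace when each one takes a few hundred milliseconds.

```python
import math


def frame_cadence(source_fps: float, target_fps: float) -> int:
    """Return N such that forwarding every Nth frame keeps the rate at or below target_fps."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    return max(1, math.ceil(source_fps / target_fps))


def workers_needed(target_fps: float, inference_ms: float) -> int:
    """Concurrent inferences required so model throughput keeps pace with target_fps."""
    # One worker finishes 1000 / inference_ms frames per second.
    return max(1, math.ceil(target_fps * inference_ms / 1000))


class FrameSampler:
    """Counts incoming frames and lets one through every `cadence` frames."""

    def __init__(self, cadence: int):
        self.cadence = cadence
        self.count = 0

    def should_process(self) -> bool:
        self.count += 1
        if self.count >= self.cadence:
            self.count = 0
            return True
        return False


if __name__ == "__main__":
    cadence = frame_cadence(30, 15)  # a 30 FPS camera thinned to 15 FPS
    sampler = FrameSampler(cadence)
    forwarded = sum(sampler.should_process() for _ in range(30))
    print(cadence, forwarded)        # 2 15
    print(workers_needed(15, 200))   # 3
```

The 200 ms inference time in the usage example is an illustrative assumption, not a measured Cerebrium figure; with a single worker, the same model would keep up with only 5 FPS.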

For those eager to dive into practical examples, the Cerebrium engineering team has thoughtfully prepared an example repository of daily-demos for you to play with. There are currently three demos:

- `content-moderation` uses OpenAI's CLIP model.

- `pet-object-detection` uses an Ultralytics YOLOv8 model for object detection.

- `transcription` uses OpenAI's Whisper model.

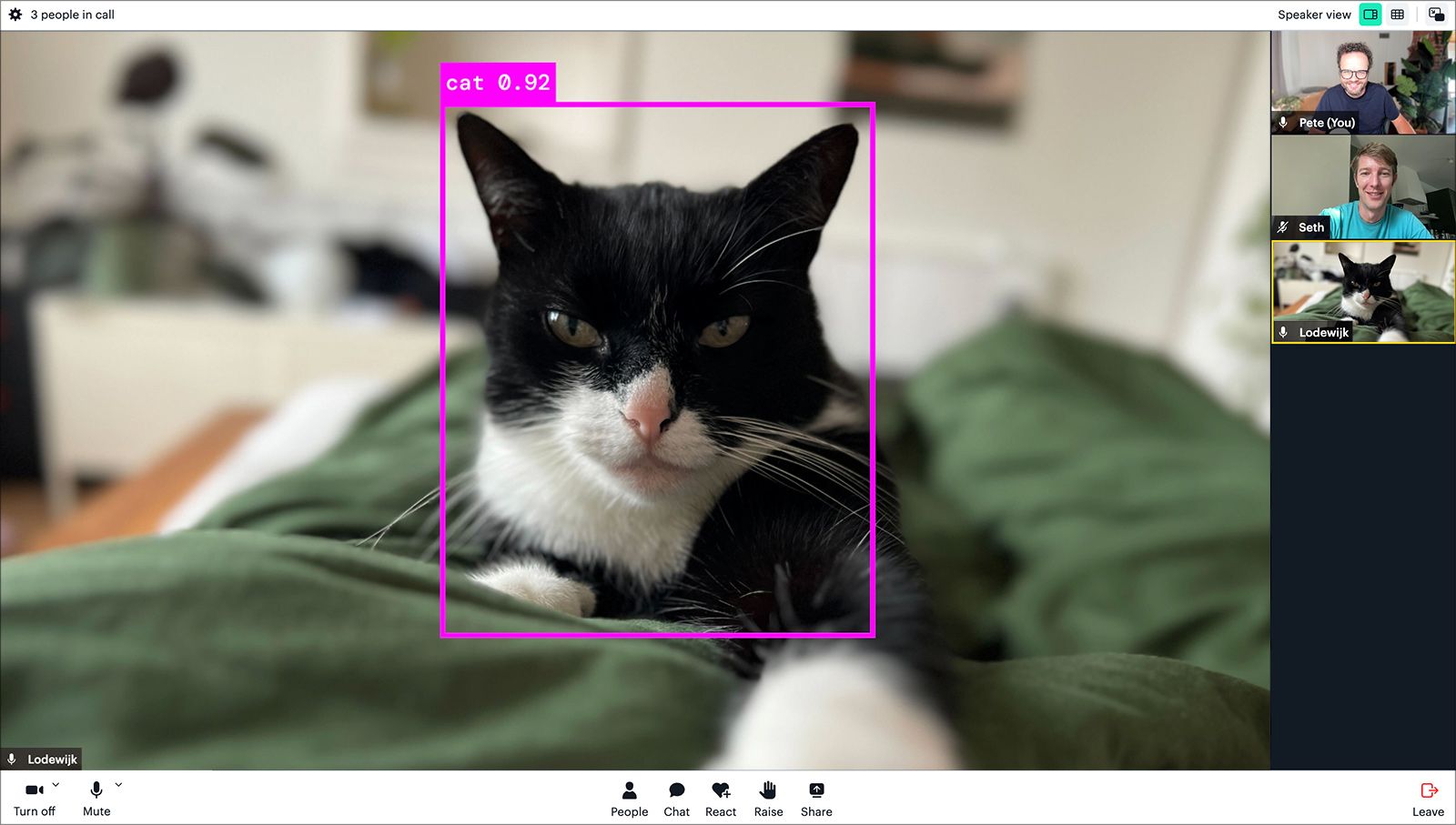

My favorite is the pet detection demo. The server-side does the following:

- Join the call with daily-python, subscribing to video streams from each participant

- Detect pets by passing video frames from each participant to the YOLO v8 model

- When a pet is detected, the model returns the frame with a bounding box

- daily-python sends the frame with the bounding box into the call, allowing everyone to enjoy a dedicated feed of pets!
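The steps above boil down to a producer/consumer loop: the video callback hands frames to a worker, the worker runs the model, and any frame with a detection goes back into the call. Below is a minimal, standard-library sketch of that shape, with the model and the outgoing camera stubbed out as plain callables (`detect` and `publish` are hypothetical stand-ins, not daily-python or Ultralytics APIs). It uses one long-lived worker thread and a one-slot queue, and drops frames that arrive while the model is busy, so a slow inference never builds up a backlog.

```python
import queue
import threading
from typing import Callable, Optional


class DetectionPipeline:
    """Feeds frames to a detector on a background thread, dropping frames while it is busy."""

    def __init__(self,
                 detect: Callable[[bytes], Optional[bytes]],
                 publish: Callable[[bytes], None]):
        self.detect = detect    # stand-in for the model: returns an annotated frame or None
        self.publish = publish  # stand-in for writing to the bot's virtual camera
        self.frames = queue.Queue(maxsize=1)
        self.dropped = 0
        self.worker = threading.Thread(target=self._run, daemon=True)
        self.worker.start()

    def on_video_frame(self, frame: bytes) -> None:
        """Called for every incoming frame; never blocks the caller."""
        try:
            self.frames.put_nowait(frame)
        except queue.Full:
            self.dropped += 1   # the model is still busy with an earlier frame

    def _run(self) -> None:
        while True:
            frame = self.frames.get()
            if frame is None:   # sentinel: shut the worker down
                self.frames.task_done()
                return
            annotated = self.detect(frame)
            if annotated is not None:
                self.publish(annotated)
            self.frames.task_done()

    def close(self) -> None:
        self.frames.join()      # wait for in-flight work to finish
        self.frames.put(None)
        self.worker.join()


if __name__ == "__main__":
    published = []
    # Pretend that any frame containing b"cat" is a detection.
    pipeline = DetectionPipeline(
        detect=lambda f: b"[box]" + f if b"cat" in f else None,
        publish=published.append,
    )
    for frame in (b"empty room", b"cat on sofa"):
        pipeline.on_video_frame(frame)
        pipeline.frames.join()  # demo only: let each frame finish so none is dropped
    pipeline.close()
    print(published)            # [b'[box]cat on sofa']
```

Dropping frames rather than queueing them is a design choice for live video: a stale frame annotated a second late is usually worth less than the next fresh one.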

You can achieve all of this in roughly 15 lines of code, without having to fret about latency or scaling!

daily-python detects a cat in one of the frames and sends it as the active speaker.

```python
# main.py
from daily import Daily

from pet_detection import PetDetection

# Item (the request payload model) is defined in the example repository and not shown here


def predict(item, run_id, logger):
    item = Item(**item)

    # Initialize daily-python
    Daily.init()

    # Initialize the AI model
    pet_detector = PetDetection()

    # On startup, connect to the room with the username "Pet Detector"
    bot_name = "Pet Detector"
    client = pet_detector.client
    client.set_user_name(bot_name)

    # Join the Daily call using the room URL
    pet_detector.join(item.room)

    # Iterate over the participants already in the call
    for participant in client.participants():
        # Skip the local participant: that is the bot itself, publishing pet-detected frames
        if participant != "local":
            # Send this participant's video frames to the pet detector
            client.set_video_renderer(participant, callback=pet_detector.on_video_frame)
```

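```python
# pet_detection.py
import threading

import cv2
import numpy as np
from daily import EventHandler
from PIL import Image
from ultralytics import YOLO

# plot_bboxes is a drawing helper from the example repository (not shown here)

# Load the model weights
pet_detection = YOLO("weights.pt")


class PetDetection(EventHandler):
    # __init__ (not shown) sets up self.client, self.camera, self.queue,
    # self.frame_count and self.frame_cadence

    def on_video_frame(self, participant, frame):
        # Only process every `frame_cadence`-th frame to bound the model's load
        self.frame_count += 1
        if self.frame_count >= self.frame_cadence:
            self.frame_count = 0
            # Queue the raw RGBA pixels together with the frame's actual size
            self.queue.put((frame.buffer, frame.width, frame.height))
            worker_thread = threading.Thread(target=self.process_frame, daemon=True)
            worker_thread.start()

    def process_frame(self):
        buffer, width, height = self.queue.get()
        try:
            image = Image.frombytes("RGBA", (width, height), buffer)
            image = cv2.cvtColor(np.array(image), cv2.COLOR_RGBA2BGR)

            detections = pet_detection(image)
            if len(detections[0].boxes) > 0:
                plotted_image = plot_bboxes(image, detections[0].boxes, score=False)
                # OpenCV works in BGR: convert back to RGB and write the raw pixels
                # to the bot's virtual camera (assumed to be an RGB camera of the
                # same size as the incoming frames)
                plotted_image = cv2.cvtColor(plotted_image, cv2.COLOR_BGR2RGB)
                self.camera.write_frame(plotted_image.tobytes())
        finally:
            # Indicate that a formerly enqueued task is complete
            self.queue.task_done()
```

Ready to get started with Cerebrium? Here's how: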

- Sign up for a Daily account. You will need to create a Daily room through the dashboard or programmatically using your Daily API key.

- Sign up for a Cerebrium account; you will need its API keys to deploy this example.

- Clone the repository, then install the Cerebrium CLI, log in, and deploy the example by running these commands in the terminal:

```shell
pip install --upgrade cerebrium
cerebrium login <private_api_key>
cerebrium deploy --config-file ./config.yaml petdetection
```

The deployment already bundles daily-python.

- Manually invite the pet bot to the call through its REST endpoint:

```shell
curl --location --request POST 'https://run.cerebrium.ai/v3/p-xxxx/pet-detection/predict' \
--header 'Authorization: <JWT_TOKEN>' \
--header 'Content-Type: application/json' \
--data '{"room": "Your Daily Room URL"}'
```

This manual step can later be automated by listening for the `participant-joined` webhook.

- Invite people to join the Daily room URL you created, especially those who have pets on hand!
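If you would rather script the setup than use the dashboard and curl, here is a minimal sketch using only Python's standard library. It assumes Daily's `POST https://api.daily.co/v1/rooms` REST endpoint with a Bearer API key, and mirrors the curl call above for the Cerebrium endpoint. The function names are hypothetical, and the `p-xxxx` project ID and JWT are placeholders you would take from your own Cerebrium dashboard.

```python
import json
import urllib.request
from typing import Optional

DAILY_ROOMS_URL = "https://api.daily.co/v1/rooms"


def create_room_request(daily_api_key: str,
                        properties: Optional[dict] = None) -> urllib.request.Request:
    """Build a POST to Daily's REST API that creates a room (optional room properties)."""
    body = json.dumps({"properties": properties or {}}).encode()
    return urllib.request.Request(
        DAILY_ROOMS_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {daily_api_key}",
            "Content-Type": "application/json",
        },
    )


def invite_bot_request(predict_url: str, jwt_token: str,
                       room_url: str) -> urllib.request.Request:
    """Build the same request as the curl command: ask the deployed bot to join room_url."""
    body = json.dumps({"room": room_url}).encode()
    return urllib.request.Request(
        predict_url,
        data=body,
        method="POST",
        headers={"Authorization": jwt_token, "Content-Type": "application/json"},
    )


def send(request: urllib.request.Request) -> dict:
    """Send a request and decode its JSON response."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

A typical run would call `room = send(create_room_request("<DAILY_API_KEY>"))`, then pass `room["url"]` to `send(invite_bot_request("https://run.cerebrium.ai/v3/p-xxxx/pet-detection/predict", "<JWT_TOKEN>", room["url"]))`. Building the requests separately from sending them keeps the request shapes easy to inspect before any network call is made.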

One of our north stars is developer time to value: in this case, how long it takes a developer with a cool idea for an ML-powered application to build, deploy, and start field testing it. With today's announcement, what used to take developers weeks can now take minutes. You can get started with Cerebrium’s free tier and Daily’s 10,000 free monthly minutes.

Let us know how we can help support you as you build. Reach out to our developer support or join our Discord community. You can always find us online or IRL at one of our regularly-hosted events. And check back for more this week in our AI Week series.