Today we’re sharing the new NVIDIA AI Blueprint, Voice Agents for Conversational AI, powered by Pipecat and NVIDIA NIM. Developed in collaboration with NVIDIA, this blueprint shows how companies can build state-of-the-art agentic experiences with Pipecat and NVIDIA AI.

Pipecat is the world’s most widely used agentic framework for real-time and conversational AI, and is fully open source. Pipecat is maintained by Daily, along with a large developer and contributor community. Pipecat now includes support for NVIDIA NIM microservices, and is available with NVIDIA AI Enterprise for production deployments.

“NVIDIA and Daily are enhancing the deployment of voice AI agents at scale,” said Justin Boitano, vice president of Enterprise AI Software Products at NVIDIA. “This collaboration enables developers to create sophisticated, real-time conversational AI experiences with unprecedented ease and flexibility.”

Pipecat is an open source, vendor-neutral orchestration layer for voice and multimodal AI use cases such as:

- Customer service agents

- LLM-powered copilots

- Virtual video avatars

- Device and IoT interfaces

- AI telephone receptionists

Pipecat includes multi-turn conversation context management, higher-level abstractions that help developers take advantage of the reasoning capabilities of today's LLMs, and event bridges for function calling and built-in tools.

NVIDIA NIM, part of the NVIDIA AI Enterprise software platform, provides a streamlined path for developing AI-powered enterprise applications and deploying AI models in production.

The voice agent blueprint is a reference workflow to help developers get started with Pipecat and NVIDIA NIM microservices. Developers can easily deploy this configurable voice agent on premises or in the cloud. Launch the blueprint to spin up a demo agent. You can talk with the agent about some exciting new NVIDIA releases, and it can also demonstrate tool use (function calling) in a voice AI context.

The voice agent uses the following NIM microservices:

- NVIDIA Riva Parakeet NIM microservice for automatic speech recognition

- Meta Llama 3.3 70B Instruct LLM NIM microservice

- NVIDIA FastPitch-HifiGAN NIM microservice for voice generation

Pipecat provides developers with state-of-the-art implementations of critical real-time components, such as phrase endpointing (turn detection) and turn-by-turn context management. The Pipecat architecture is designed to be modular, composable, and ultra-low-latency.
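To make phrase endpointing concrete, here is a deliberately simplified sketch in Python (Pipecat's own language) that ends a user's turn after a fixed run of silence reported by a voice activity detector (VAD). The `SilenceEndpointer` class, the 20 ms frame size, and the 800 ms silence threshold are illustrative assumptions; this is not Pipecat's API or its turn-detection algorithm, which goes beyond a single timeout.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class SilenceEndpointer:
    """Hypothetical endpointer: ends a turn after `stop_ms` of silence that follows speech."""

    frame_ms: int = 20   # assumed duration of one VAD frame
    stop_ms: int = 800   # assumed silence needed before the turn is considered over
    _in_speech: bool = field(default=False, init=False)
    _silence_ms: int = field(default=0, init=False)

    def process(self, is_speech: bool) -> bool:
        """Feed one VAD decision; return True on the frame where the user's turn ends."""
        if is_speech:
            # Any speech resets the silence timer, so short pauses do not end the turn.
            self._in_speech = True
            self._silence_ms = 0
            return False
        if not self._in_speech:
            return False  # silence before the user has said anything
        self._silence_ms += self.frame_ms
        if self._silence_ms >= self.stop_ms:
            self._in_speech = False
            self._silence_ms = 0
            return True
        return False


def find_turn_ends(vad_frames: Sequence[bool], endpointer: SilenceEndpointer) -> List[int]:
    """Return the indices of the frames at which a turn end was detected."""
    return [i for i, speech in enumerate(vad_frames) if endpointer.process(speech)]


# A 200 ms pause mid-utterance does not end the turn; 800 ms of silence does.
frames = [True] * 5 + [False] * 10 + [True] * 5 + [False] * 40
print(find_turn_ends(frames, SilenceEndpointer()))  # [59]
```

A fixed timeout forces a trade-off: a short threshold cuts users off mid-thought, while a long one adds latency to every reply. That trade-off is one reason more sophisticated turn-detection approaches exist.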

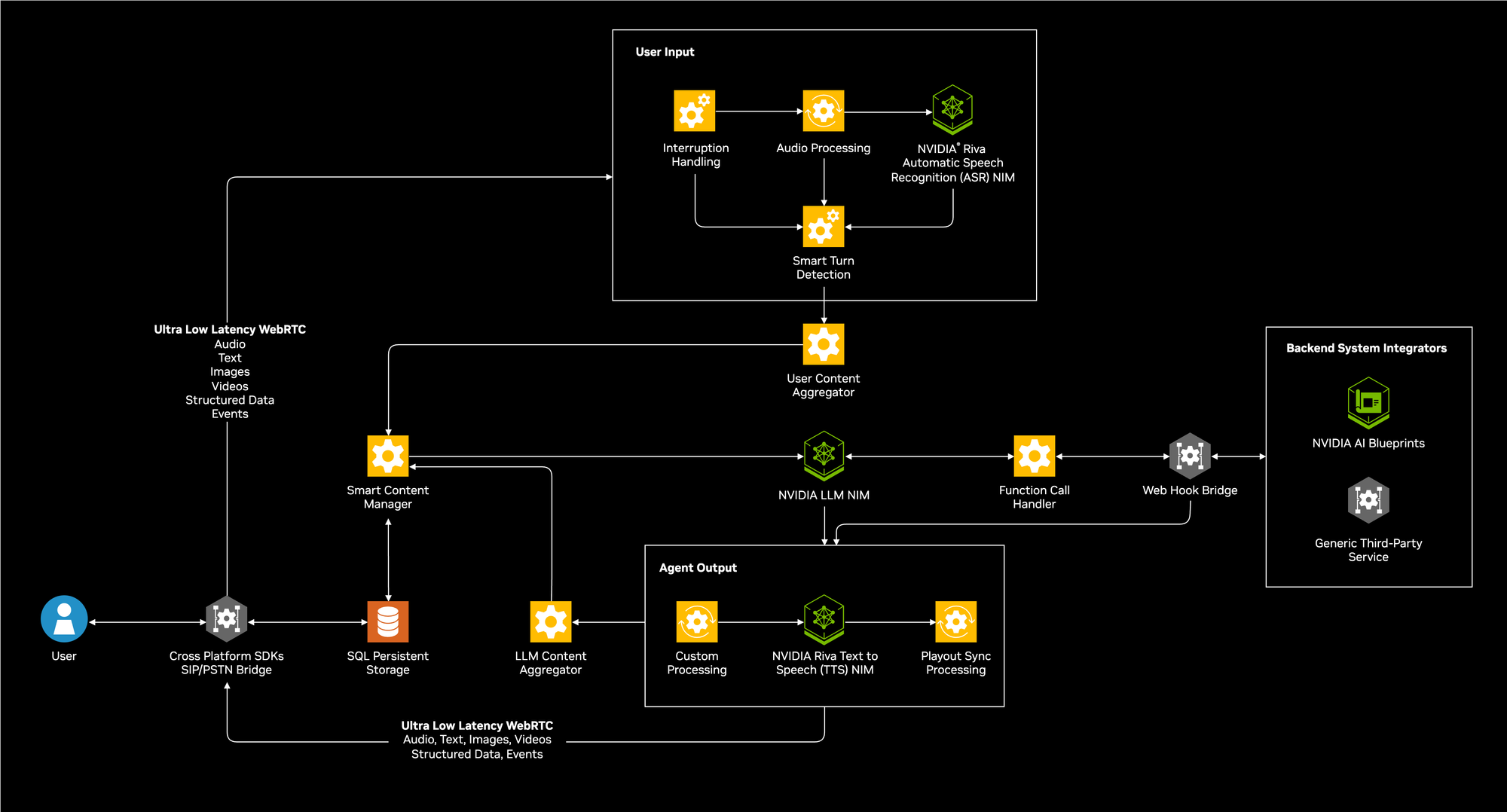

Architecture

Here is an architecture diagram of the configurable voice agent blueprint:

The voice agent can run on premises, in the cloud, or locally for development and testing.

The user talks to the voice agent using Pipecat's telephony (PSTN and SIP) connectors, or Pipecat's cross-platform SDKs for the Web, React, Android, iOS, Python, and C++.

To deliver a natural conversation experience, the agent must respond at human conversational speed and handle interruptions gracefully. The User Input block of the architecture diagram highlights the components that provide fast and natural conversational behavior. Pipecat's advanced audio processing ensures that voice agents work well even when the user is in a noisy environment, such as an airport or a room with a television playing in the background.
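Interruption handling (often called barge-in) comes down to stopping the bot's audio as soon as the user starts speaking. The asyncio sketch below simulates this: a playout task emits audio chunks, and a simulated "user started speaking" event cancels it. The function names and timings are hypothetical stand-ins for real audio output and voice activity detection; they are not Pipecat's interface.

```python
import asyncio
from typing import List, Tuple


async def play_chunks(chunks: List[str], played: List[str], chunk_s: float) -> None:
    """Hypothetical playout loop: each sleep stands in for one chunk of audio being played."""
    for chunk in chunks:
        await asyncio.sleep(chunk_s)
        played.append(chunk)


async def bot_turn(chunks: List[str], user_speaks_after_s: float,
                   chunk_s: float = 0.05) -> Tuple[List[str], bool]:
    """Play a bot response; cancel it if the (simulated) user starts speaking first.

    Returns the chunks that were actually played and whether the turn was interrupted.
    """
    played: List[str] = []
    playout = asyncio.create_task(play_chunks(chunks, played, chunk_s))
    # Wait for playout to finish, but no longer than the moment the user speaks.
    done, _ = await asyncio.wait({playout}, timeout=user_speaks_after_s)
    if playout in done:
        return played, False
    # Simulated VAD "user started speaking" event: stop the bot right away.
    playout.cancel()
    try:
        await playout
    except asyncio.CancelledError:
        pass
    return played, True


played, interrupted = asyncio.run(bot_turn([f"chunk{i}" for i in range(10)], 0.12))
print(interrupted, played)
```

In a real agent, an interruption would also need to flush any LLM and TTS output still queued downstream, not just stop the audio that is currently playing.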

The core of the conversational experience is the LLM NIM microservice. With Pipecat, developers can switch to a different LLM by changing one line of code. Pipecat supports all LLM NIM microservices, including fine-tuned and custom models.
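LLM NIM microservices expose an OpenAI-compatible chat completions API, which helps keep a model swap down to a single line. The sketch below only builds a request body and sends nothing; the `LLM_MODEL` constant and `build_chat_request` helper are hypothetical and are not Pipecat's service classes. The model identifier shown is the one the NVIDIA API catalog uses for Llama 3.3 70B Instruct, but check the catalog or your own NIM deployment for the exact name.

```python
import json
from typing import Any, Dict, List

# The one line to change when switching to a different LLM NIM
# (for example, a fine-tuned or custom model served by your own NIM deployment).
LLM_MODEL = "meta/llama-3.3-70b-instruct"


def build_chat_request(messages: List[Dict[str, str]], model: str = LLM_MODEL,
                       temperature: float = 0.2) -> Dict[str, Any]:
    """Build an OpenAI-style /v1/chat/completions request body (hypothetical helper)."""
    return {
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "stream": True,  # stream tokens so speech synthesis can start before the reply is complete
    }


body = build_chat_request([
    {"role": "system", "content": "You are a concise, friendly voice assistant."},
    {"role": "user", "content": "What's new this week?"},
])
print(json.dumps(body, indent=2))
```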

LLM tool use is critical for production conversational AI. Pipecat provides higher-level abstractions that help developers build reliable AI agents on top of LLM function calling and built-in tool capabilities. Function calls can be handled locally or automatically translated into network requests.
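The difference between handling a function call locally and turning it into a network request can be sketched as a small dispatcher. It assumes the LLM returns OpenAI-style tool calls (a function name plus JSON-encoded arguments); `ToolDispatcher`, `convert_temperature`, `get_order_status`, and the example URL are hypothetical names for illustration, not Pipecat's function-calling API.

```python
import json
import urllib.request
from typing import Any, Callable, Dict, Optional

Sender = Callable[[str, Dict[str, Any]], Any]


class ToolDispatcher:
    """Hypothetical router for LLM tool calls: run locally or forward over HTTP."""

    def __init__(self, send: Optional[Sender] = None) -> None:
        self.local: Dict[str, Callable[..., Any]] = {}
        self.remote: Dict[str, str] = {}  # tool name -> endpoint URL
        self._send = send or self._http_post

    def register_local(self, name: str, fn: Callable[..., Any]) -> None:
        self.local[name] = fn

    def register_remote(self, name: str, url: str) -> None:
        self.remote[name] = url

    @staticmethod
    def _http_post(url: str, payload: Dict[str, Any]) -> Any:
        """Default transport: POST the arguments as JSON and decode a JSON response."""
        req = urllib.request.Request(
            url,
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(req, timeout=5) as resp:
            return json.loads(resp.read().decode("utf-8"))

    def dispatch(self, tool_call: Dict[str, str]) -> Any:
        """Execute one tool call of the form {"name": ..., "arguments": "<JSON string>"}."""
        name = tool_call["name"]
        args = json.loads(tool_call.get("arguments") or "{}")
        if name in self.local:
            return self.local[name](**args)
        if name in self.remote:
            return self._send(self.remote[name], args)
        raise KeyError(f"unknown tool: {name}")


def convert_temperature(celsius: float) -> float:
    """Example local tool: Celsius to Fahrenheit."""
    return celsius * 9 / 5 + 32


# For the demo, replace the HTTP transport with a stub that echoes the request.
dispatcher = ToolDispatcher(send=lambda url, payload: {"forwarded_to": url, "args": payload})
dispatcher.register_local("convert_temperature", convert_temperature)
dispatcher.register_remote("get_order_status", "https://example.com/tools/order-status")

print(dispatcher.dispatch({"name": "convert_temperature", "arguments": '{"celsius": 100}'}))  # 212.0
print(dispatcher.dispatch({"name": "get_order_status", "arguments": '{"order_id": "A123"}'}))
```

Injecting the transport keeps the routing logic testable and lets the same dispatcher forward calls over HTTP in production.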

Voice generation is performed by the NVIDIA FastPitch-HifiGAN NIM microservice. Pipecat also supports all of today's major text-to-speech (TTS) models and services.

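In a voice agent, the conversation context manager must track what the user actually heard and include only that speech/text in the context. When the user interrupts, the LLM has often generated more text than was played out, and the unheard portion should not be recorded as part of the conversation. Pipecat automatically tracks audio playout to correlate audio and text timestamps for context management.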
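One way to keep only what the user actually heard is to attach a playout end time to each spoken word and, on interruption, cut the assistant's message at the interruption time. The sketch below assumes word-level timestamps are available from the TTS output and uses an OpenAI-style message list for the context; `TimedWord`, `heard_text`, and `add_assistant_turn` are hypothetical names, not Pipecat's context-management API.

```python
from dataclasses import dataclass
from itertools import takewhile
from typing import Dict, List, Optional


@dataclass
class TimedWord:
    text: str
    end_s: float  # when this word finished playing, in seconds from the start of playout


def heard_text(words: List[TimedWord], interrupted_at_s: Optional[float]) -> str:
    """Return only the words whose audio finished playing before the interruption."""
    if interrupted_at_s is None:
        return " ".join(w.text for w in words)  # response played to completion
    # Words are in playout order, so stop at the first one the user did not fully hear.
    heard = takewhile(lambda w: w.end_s <= interrupted_at_s, words)
    return " ".join(w.text for w in heard)


def add_assistant_turn(context: List[Dict[str, str]], words: List[TimedWord],
                       interrupted_at_s: Optional[float]) -> List[Dict[str, str]]:
    """Append the heard portion of the bot's reply to the conversation context."""
    text = heard_text(words, interrupted_at_s)
    if text:  # if the user interrupted before the first word finished, record nothing
        context.append({"role": "assistant", "content": text})
    return context


reply = [TimedWord("Here", 0.2), TimedWord("are", 0.35), TimedWord("three", 0.6),
         TimedWord("new", 0.8), TimedWord("releases", 1.2)]
context = [{"role": "user", "content": "What's new?"}]
add_assistant_turn(context, reply, interrupted_at_s=0.7)
print(context[-1])  # {'role': 'assistant', 'content': 'Here are three'}
```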

Optional features that can be enabled for this voice agent include persistent conversation storage, so that user sessions can span multiple calls, and integrations with backend systems (for example, proprietary customer infrastructure) and with external AI services such as hosted RAG platforms.
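Persistent conversation storage can be as simple as saving the message list under a caller identifier when a call ends and loading it when the same caller returns. The sketch below uses Python's built-in `sqlite3` module; the `ConversationStore` class, the table layout, and the phone-number key are hypothetical, and a production deployment might use a shared database instead.

```python
import json
import sqlite3
from typing import Dict, List

Message = Dict[str, str]


class ConversationStore:
    """Hypothetical per-user conversation store so a session can span multiple calls."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS sessions ("
            " user_id TEXT PRIMARY KEY,"
            " messages TEXT NOT NULL)"
        )

    def load(self, user_id: str) -> List[Message]:
        """Return the saved context for this user, or an empty list for a new caller."""
        row = self.db.execute(
            "SELECT messages FROM sessions WHERE user_id = ?", (user_id,)
        ).fetchone()
        return json.loads(row[0]) if row else []

    def save(self, user_id: str, messages: List[Message]) -> None:
        """Insert or overwrite the stored context for this user."""
        self.db.execute(
            "INSERT OR REPLACE INTO sessions (user_id, messages) VALUES (?, ?)",
            (user_id, json.dumps(messages)),
        )
        self.db.commit()


store = ConversationStore()
# First call: the agent saves the conversation when the call ends.
store.save("+15555550100", [{"role": "user", "content": "Please call me back tomorrow."}])
# Second call from the same number: the earlier context is restored.
print(store.load("+15555550100"))
```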

Conclusion

Pipecat with the NVIDIA AI Enterprise platform and NIM microservices provides a full-stack, enterprise-ready, configurable, and highly flexible solution for voice and multimodal conversational AI agents.

Building on this solid technology base gives developers immediate access to:

- More than 40 AI services and APIs

- State-of-the-art implementations of foundational, low-level conversational AI components such as phrase endpointing, interruption handling, and noise reduction

- State-of-the-art implementations of conversational AI building blocks such as smart context management, local and network function calling, and handlers for LLM built-in tools

- Telephony (SIP and PSTN), WebSocket, and WebRTC network transports

- Flexible deployment options including on-prem and VPC architectures

- The NVIDIA API catalog, which includes NVIDIA NIM microservices for the latest AI foundation models and NVIDIA AI Blueprints to accelerate AI application development and deployment.

Get started on GitHub, and access the blueprint on NVIDIA AI. We're excited to support developers and companies building voice agents. As always, let us know how we can help.